Rendering in Blender can sometimes feel endless, especially on heavy Cycles projects. One way to speed up your workflow is Blender distributed rendering: by spreading the work across multiple GPUs or multiple machines, you can cut render times in half or even more.

What is Blender Distributed Rendering?

In simple terms, distributed rendering means splitting a render job into smaller parts and letting multiple GPUs or computers work on it at the same time. Instead of one device carrying the whole burden, several devices share the load. As a result, the final render finishes much faster.
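For animations, the simplest way to split a job across several computers is to give each machine its own range of frames. The Python sketch below makes some assumptions: every machine has Blender on its `PATH` and can open the same .blend file. The file name `scene.blend` and the helper names `split_frames` and `blender_commands` are hypothetical. The sketch divides an inclusive frame range into contiguous chunks and builds one command line per machine using Blender's standard `-b` (run in background), `-s`/`-e` (start and end frame) and `-a` (render animation) options.

```python
import shlex


def split_frames(start: int, end: int, machines: int) -> list[tuple[int, int]]:
    """Split the inclusive frame range [start, end] into contiguous chunks, one per machine."""
    if machines < 1:
        raise ValueError("machines must be at least 1")
    if end < start:
        raise ValueError("end must not be before start")
    total = end - start + 1
    base, extra = divmod(total, machines)
    chunks = []
    first = start
    for i in range(machines):
        # The first `extra` machines get one additional frame each.
        size = base + (1 if i < extra else 0)
        if size == 0:
            continue  # more machines than frames: leave the rest idle
        last = first + size - 1
        chunks.append((first, last))
        first = last + 1
    return chunks


def blender_commands(blend_file: str, chunks: list[tuple[int, int]]) -> list[list[str]]:
    """Build one Blender command line per chunk (hypothetical helper).

    Blender processes arguments in order, so the file and the -s/-e range
    must come before -a, which starts rendering the animation.
    """
    return [
        ["blender", "-b", blend_file, "-s", str(s), "-e", str(e), "-a"]
        for s, e in chunks
    ]


if __name__ == "__main__":
    # Example: 250 frames shared by 4 machines.
    for cmd in blender_commands("scene.blend", split_frames(1, 250, 4)):
        print(shlex.join(cmd))
```

Contiguous chunks keep the logic simple. If some shots are much heavier than others, interleaving frames (each machine renders every Nth frame, using Blender's `-j` frame-step option) can spread the load more evenly.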

For example, if one GPU takes 20 minutes to render your scene, two identical GPUs could bring that down to around 10 minutes, and four could approach 5 minutes, as long as the work scales close to linearly.
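The arithmetic behind these numbers can be written down with Amdahl's law, which says that only the parallel share of the work gets faster with more GPUs. The sketch below is a back-of-the-envelope model, not a Blender feature: the function name is hypothetical, and the 5% "serial" share (work such as loading the scene that does not speed up with extra GPUs) is an illustrative assumption, not a measured figure.

```python
def estimate_render_minutes(
    single_gpu_minutes: float, gpus: int, serial_fraction: float = 0.0
) -> float:
    """Estimate render time on `gpus` identical GPUs using Amdahl's law.

    serial_fraction is the assumed share of the single-GPU time that does not
    speed up with more GPUs (for example, loading and preparing the scene).
    """
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must be between 0 and 1")
    parallel_fraction = 1.0 - serial_fraction
    return single_gpu_minutes * (serial_fraction + parallel_fraction / gpus)


if __name__ == "__main__":
    for n in (1, 2, 4):
        ideal = estimate_render_minutes(20, n)
        realistic = estimate_render_minutes(20, n, serial_fraction=0.05)
        print(f"{n} GPU(s): ideal {ideal:.2f} min, with 5% serial work {realistic:.2f} min")
```

With no serial work, 20 minutes becomes exactly 10 on two GPUs and 5 on four. With an assumed 5% serial share, the estimates become 10.5 and 5.75 minutes, which is why real multi-GPU gains usually land a little below the ideal.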

Why Use Distributed Rendering in Blender?

There are several reasons why artists and studios turn to Blender distributed rendering.

First, rendering with a single GPU or CPU is often too slow, especially when working with realistic lighting, complex textures, or high-poly models.

Second, offloading renders to other GPUs or machines lets you keep working while the render runs in the background, so you don’t have to spend hours waiting for results.

Third, it helps avoid system lag. With multiple GPUs, you can dedicate one card to rendering while another keeps your desktop responsive.

Finally, distributed rendering gives you flexibility. You can choose between scaling up with multiple GPUs in one machine or scaling out by connecting multiple computers in a render farm.

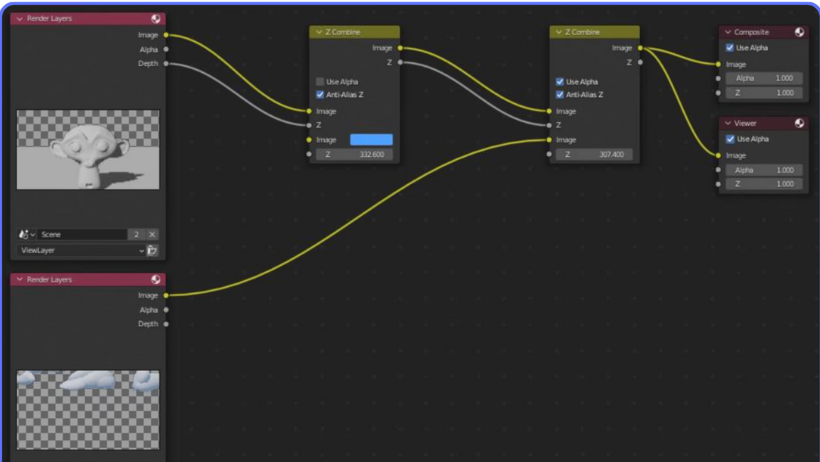

Multi-GPU Rendering in Blender

Blender supports multi-GPU rendering with Cycles, and the results can be impressive. When you add a second GPU of the same model, Cycles splits the work for each frame between the two devices, so the total render time drops to roughly half.

Cycles also scales well beyond two cards: adding a third or fourth GPU generally keeps reducing render times. Scaling is rarely perfectly linear, though, because some steps, such as loading and preparing the scene, do not get faster with more GPUs.
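Cycles decides how to divide work between devices on its own, so you never have to do this by hand. Purely to illustrate why two identical cards roughly halve the time, the toy Python sketch below gives each device a share of the image rows proportional to its relative speed, so that all devices finish at about the same time. It is not Cycles' actual scheduler; `split_rows` and the speed values are hypothetical.

```python
def split_rows(height: int, speeds: list[float]) -> list[int]:
    """Toy model: split `height` image rows between devices in proportion to their speed."""
    if height < 0:
        raise ValueError("height must be non-negative")
    if not speeds or any(s <= 0 for s in speeds):
        raise ValueError("speeds must be a non-empty list of positive numbers")
    total = sum(speeds)
    exact = [height * s / total for s in speeds]
    rows = [int(x) for x in exact]  # round every share down first
    # Hand the remaining rows to the devices with the largest fractional remainders,
    # so the shares always add up to the full image height.
    leftover = height - sum(rows)
    by_remainder = sorted(range(len(speeds)), key=lambda i: exact[i] - rows[i], reverse=True)
    for i in by_remainder[:leftover]:
        rows[i] += 1
    return rows


if __name__ == "__main__":
    print(split_rows(1080, [1.0, 1.0]))  # two identical cards
    print(split_rows(1080, [2.0, 1.0]))  # one card assumed twice as fast as the other
```

Proportional splitting matters when the cards differ: giving a slower card the same share as a faster one would leave the faster card idle while it waits, so the frame would take as long as the slowest device needs.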

Handling Memory with NVLink

One common problem in GPU rendering is running out of VRAM. If your scene is too large, you might face out-of-memory errors. NVIDIA’s NVLink technology can help with this.

With NVLink, supported GPUs connected by an NVLink bridge can pool their memory. For example, two RTX 3090 cards with 24 GB of VRAM each can give Cycles access to far more than a single card’s 24 GB, approaching 48 GB, although not every kind of data can be shared between the cards. This makes it possible to render much larger architectural or animation scenes that would not fit on one GPU.

The Smartest Choice: Cloud Distributed Rendering

While building your own multi-GPU system is possible, it is expensive and takes effort to maintain. That is why many artists now turn to cloud-based Blender distributed rendering services such as 3S Cloud Render Farm.

Here’s why:

- You get instant access to high-performance servers with multiple GPUs.

- You don’t need to invest thousands of dollars in hardware.

- You can scale your rendering power anytime, depending on the size of your project.

- You only pay for what you use, which can be very cost-effective compared with buying hardware.

In short, cloud rendering allows you to enjoy all the benefits of distributed rendering without the headaches of managing hardware.

Conclusion

Blender is already a powerful tool for 3D artists, but rendering can slow down the creative process. With Blender distributed rendering, you can cut render times substantially, keep your workflow smooth, and finish projects much faster.

Whether you use multi-GPU setups at home or leverage the flexibility of 3S Cloud Render Farm, distributed rendering is the key to speeding up your Blender projects.